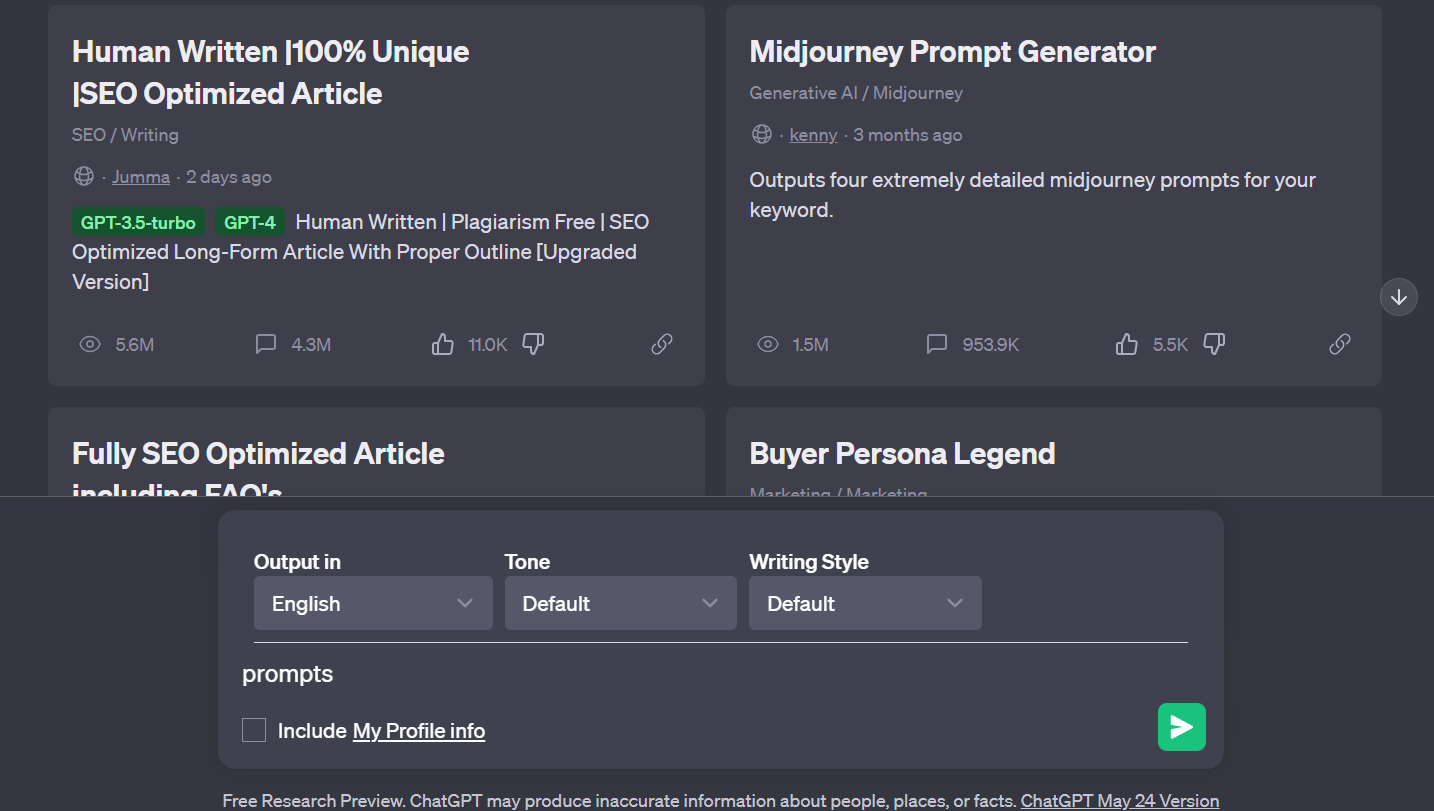

AIPRM Prompts – 2023

In today’s fast-paced digital world, content creation plays a vital role in capturing readers’ attention and driving organic traffic to websites. However, consistently coming up with fresh, engaging ideas is a challenge for many content writers. That’s where AIPRM Prompts come in. In this article, we will explore the benefits of using AIPRM…